Highlights

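Why do developers need more efficient coding tools? Because the scarcest resource in software development is not code but attention. Whether you are fixing a bug, writing tests, or refactoring, every context switch consumes significant mental energy. OpenAI's newly launched Codex is designed to address exactly this pain point.

How does Codex improve development efficiency? It is a cloud-based software engineering agent, built on a version of o3 optimized for software engineering, that can work on many tasks in parallel. You can run several agents at once, each independently handling a coding task such as writing features, answering questions about your codebase, fixing bugs, or proposing pull requests. Each task runs in its own sandbox environment, and the agent provides verifiable evidence so you can trace every step.

How well does it work in practice? Early testers such as Cisco and Temporal found Codex best suited to repetitive, well-scoped tasks. OpenAI's own teams have folded it into their daily workflow to reduce context switching, plan tasks, and handle background work. Although it still lacks some features, this asynchronous, multi-agent workflow may well become the standard way engineers produce high-quality code.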

Introducing Codex

A cloud-based software engineering agent that can work on many tasks in parallel, powered by codex-1. Available to ChatGPT Pro, Team and Enterprise users today, Plus soon.


Today we’re launching a research preview of Codex: a cloud-based software engineering agent that can work on many tasks in parallel. Codex can perform tasks for you such as writing features, answering questions about your codebase, fixing bugs, and proposing pull requests for review; each task runs in its own cloud sandbox environment, preloaded with your repository.


Codex is powered by codex-1, a version of OpenAI o3 optimized for software engineering. It was trained using reinforcement learning on real-world coding tasks in a variety of environments to generate code that closely mirrors human style and PR preferences, adheres precisely to instructions, and can iteratively run tests until it receives a passing result. We’re starting to roll out Codex to ChatGPT Pro, Enterprise, and Team users today, with support for Plus and Edu coming soon.

How Codex works

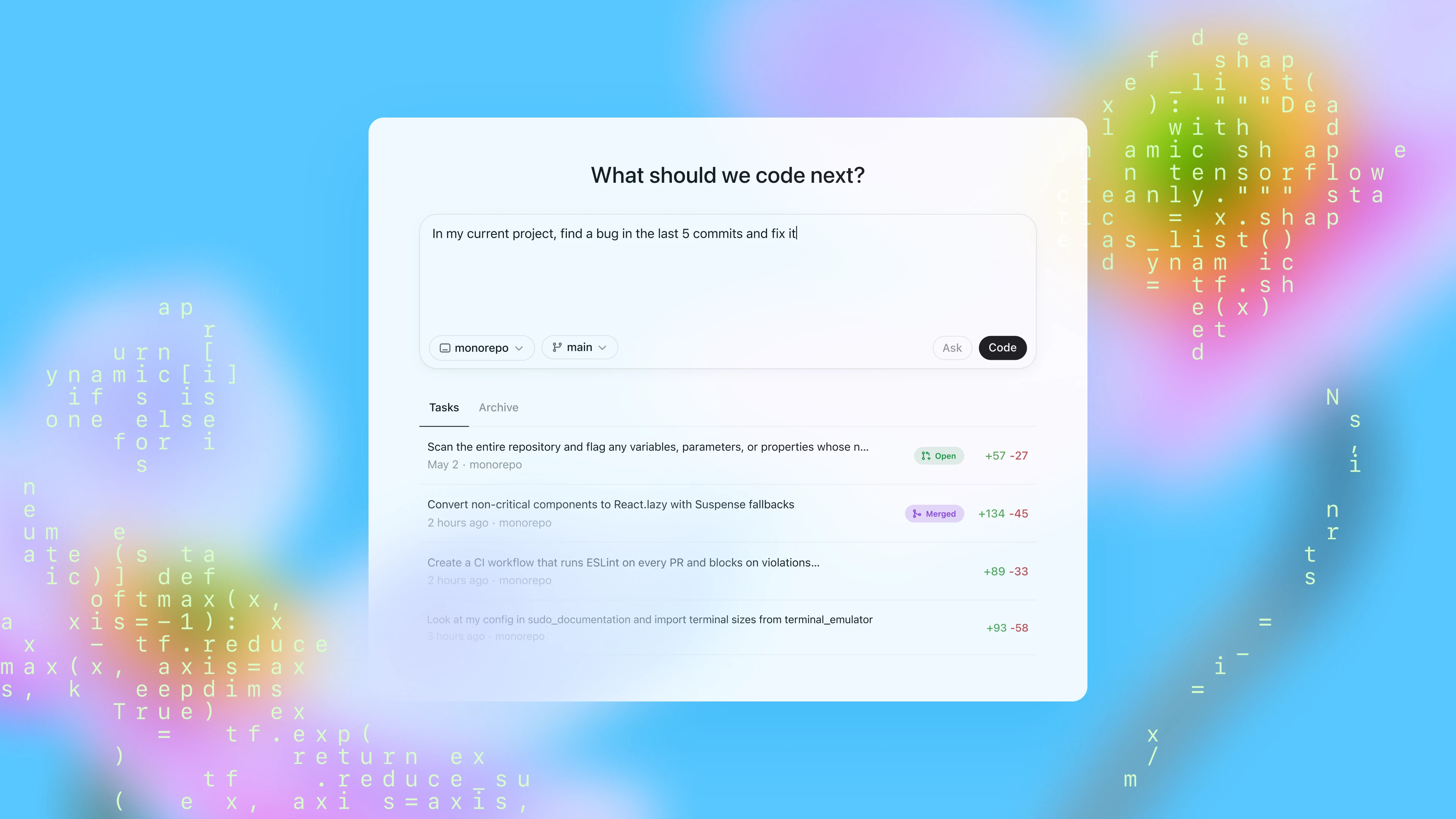

Today you can access Codex through the sidebar in ChatGPT and assign it new coding tasks by typing a prompt and clicking “Code”. If you want to ask Codex a question about your codebase, click “Ask”. Each task is processed independently in a separate, isolated environment preloaded with your codebase. Codex can read and edit files, as well as run commands including test harnesses, linters, and type checkers. Task completion typically takes between 1 and 30 minutes, depending on complexity, and you can monitor Codex’s progress in real time.


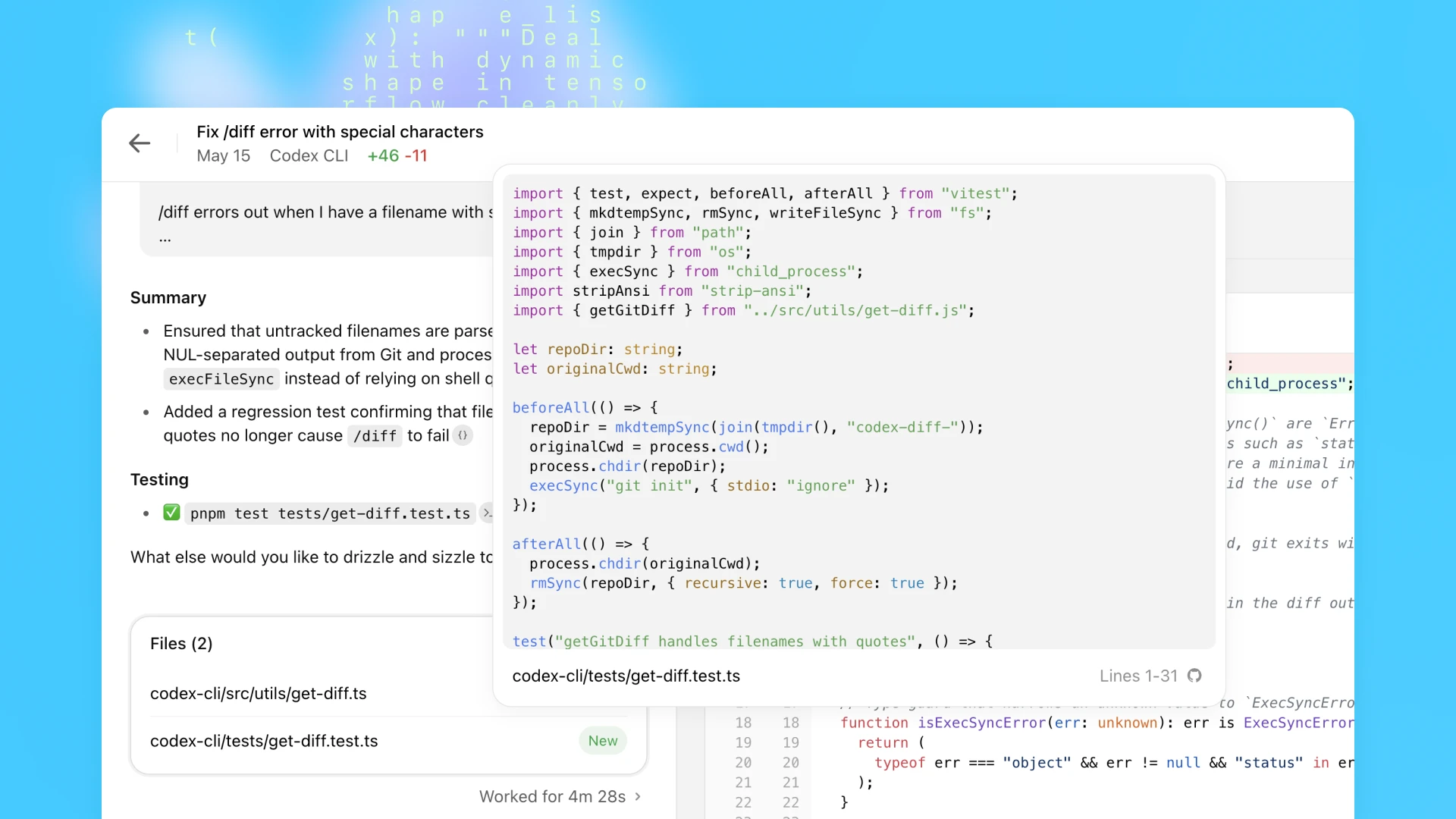

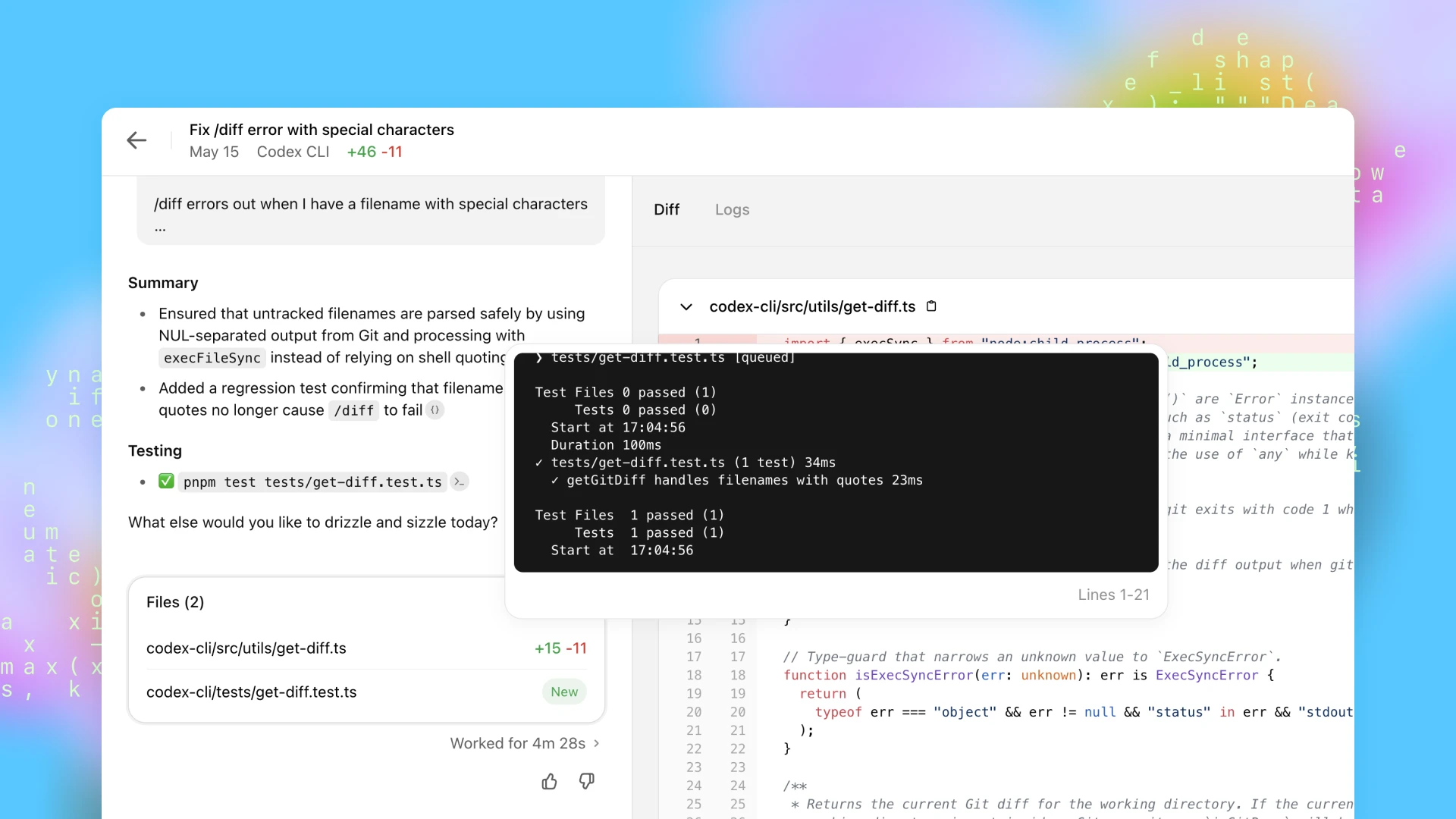

Once Codex completes a task, it commits its changes in its environment. Codex provides verifiable evidence of its actions through citations of terminal logs and test outputs, allowing you to trace each step taken during task completion. You can then review the results, request further revisions, open a GitHub pull request, or directly integrate the changes into your local environment. In the product, you can configure the Codex environment to match your real development environment as closely as possible.


[Video: “OpenAI Codex Log”, OpenAI on Vimeo]

Codex can be guided by AGENTS.md files placed within your repository. These are text files, akin to README.md, where you can inform Codex how to navigate your codebase, which commands to run for testing, and how best to adhere to your project’s standard practices. Like human developers, Codex agents perform best when provided with configured dev environments, reliable testing setups, and clear documentation.
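
The system message reproduced in the appendix states two scoping rules for these files: an AGENTS.md file governs the entire directory tree rooted at the folder that contains it, and more deeply nested files take precedence when instructions conflict. The Python sketch below illustrates those two rules only. The function name `applicable_agents_files` and the example paths are hypothetical, and this is not a description of how Codex itself resolves the files.

```python
from pathlib import PurePosixPath


def applicable_agents_files(touched_file, agents_files):
    """Return the AGENTS.md files whose scope includes `touched_file`.

    Paths are repository-relative. The result is ordered from lowest to
    highest precedence: shallower files first, deeper files last.
    """
    target = PurePosixPath(touched_file)
    in_scope = []
    for agents_path in agents_files:
        scope_root = PurePosixPath(agents_path).parent
        # In scope when the touched file lives at or below the AGENTS.md folder.
        if scope_root in target.parents:
            in_scope.append(PurePosixPath(agents_path))
    # A deeper scope root has more path components, so sort by depth.
    in_scope.sort(key=lambda p: len(p.parent.parts))
    return [str(p) for p in in_scope]


# Hypothetical repository layout, deliberately listed out of order.
agents = ["services/api/AGENTS.md", "docs/AGENTS.md", "AGENTS.md", "services/AGENTS.md"]
print(applicable_agents_files("services/api/handler.py", agents))
# ['AGENTS.md', 'services/AGENTS.md', 'services/api/AGENTS.md']
```

The last entry in the returned list wins on conflicts. Per the same system message, instructions given directly in the prompt still take precedence over anything in an AGENTS.md file.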


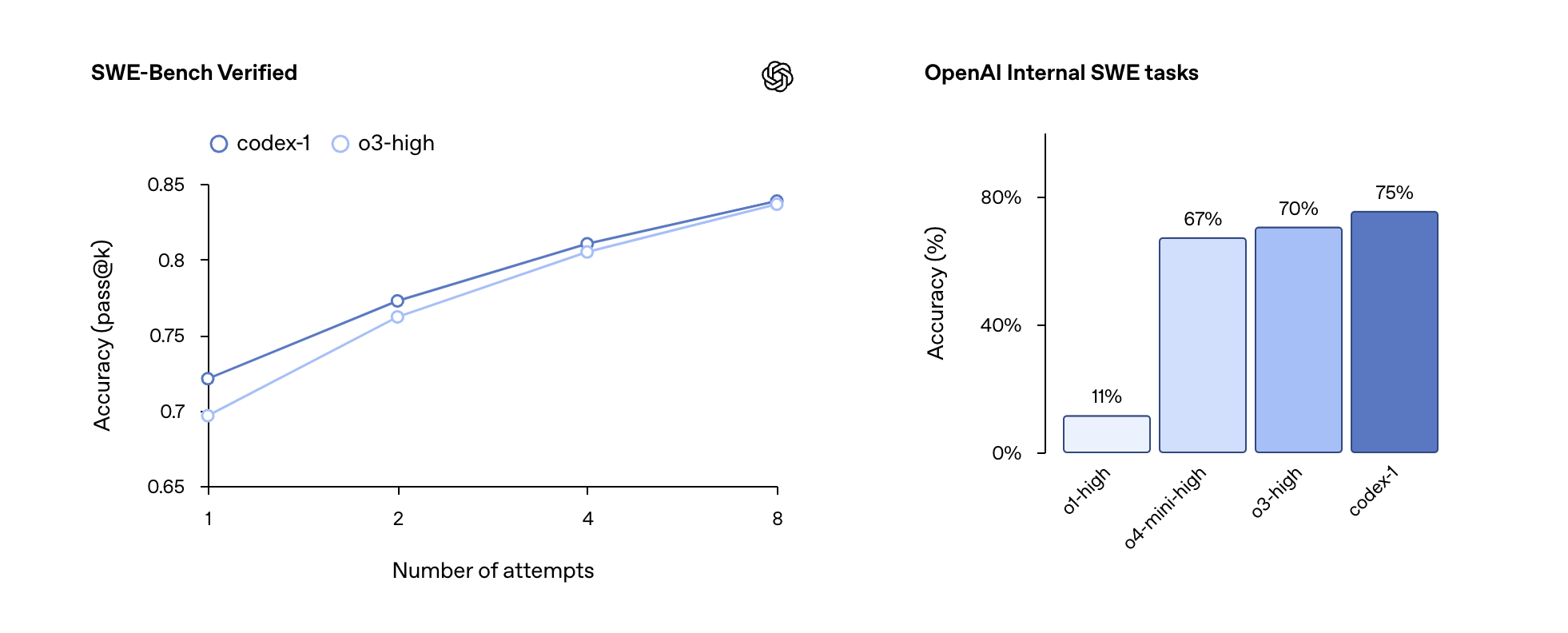

On coding evaluations and internal benchmarks, codex-1 shows strong performance even without AGENTS.md files or custom scaffolding.


23 SWE-Bench Verified samples that were not runnable on our internal infrastructure were excluded. codex-1 was tested at a maximum context length of 192k tokens and medium ‘reasoning effort’, which is the setting that will be available in product today. For details on o3 evaluations, see here.


Our internal SWE task benchmark is a curated set of real-world internal SWE tasks at OpenAI.

Building safe and trustworthy agents

We’re releasing Codex as a research preview, in line with our iterative deployment strategy. We prioritized security and transparency when designing Codex so users can verify its outputs, a safeguard that grows increasingly important as AI models handle more complex coding tasks independently and safety considerations evolve. Users can check Codex’s work through citations, terminal logs, and test results. When uncertain or faced with test failures, the Codex agent explicitly communicates these issues, enabling users to make informed decisions about how to proceed. It remains essential for users to manually review and validate all agent-generated code before integration and execution.

Aligning to human preferences

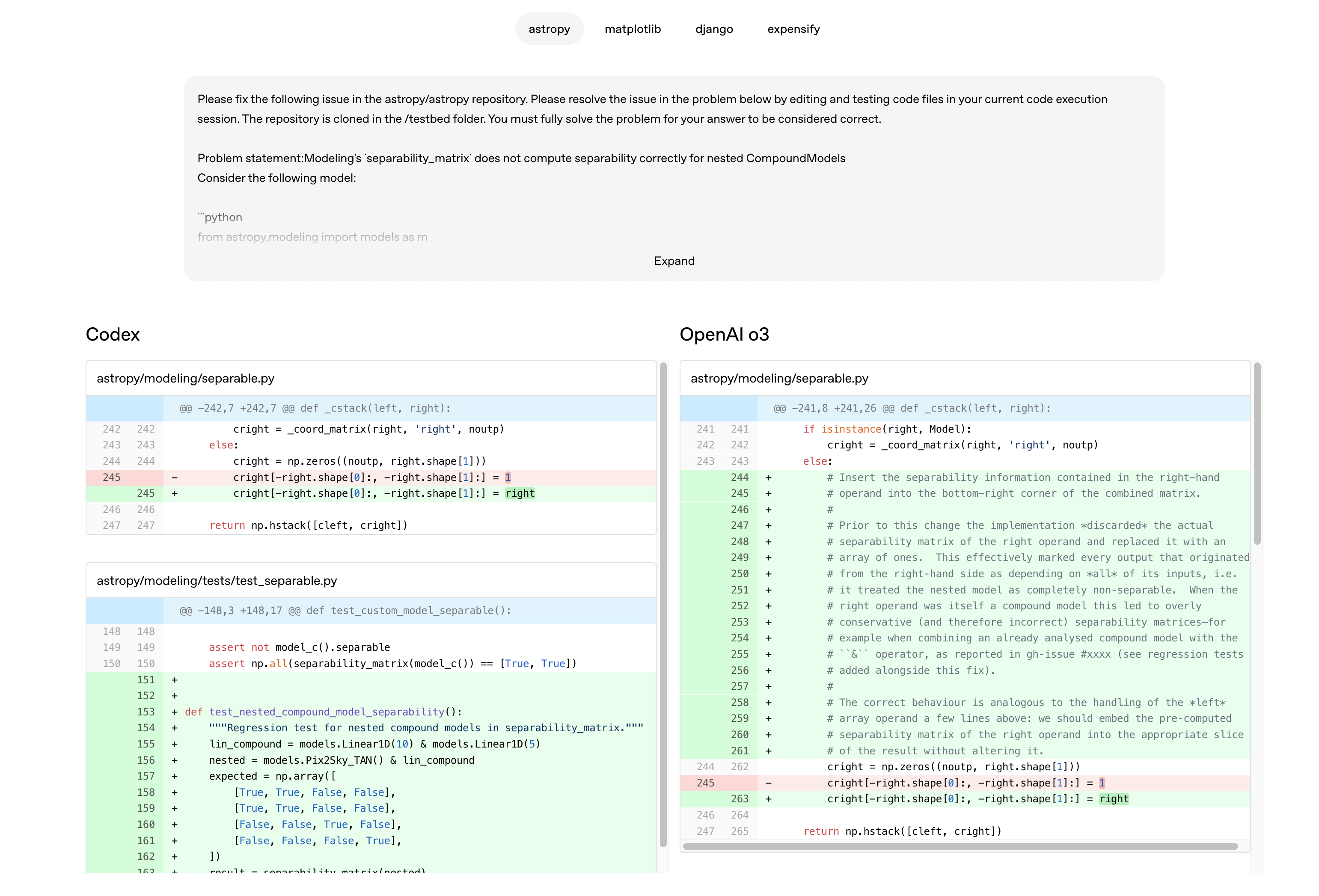

A primary goal while training codex-1 was to align outputs closely with human coding preferences and standards. Compared to OpenAI o3, codex-1 consistently produces cleaner patches ready for immediate human review and integration into standard workflows.

Preventing abuse

Safeguarding against malicious applications of AI-driven software engineering, such as malware development, is increasingly critical. At the same time, it’s important that protective measures do not unduly hinder legitimate and beneficial applications that may involve techniques sometimes also used for malware development, such as low-level kernel engineering.

To balance safety and utility, Codex was trained to identify and precisely refuse requests aimed at development of malicious software, while clearly distinguishing and supporting legitimate tasks. We’ve also enhanced our policy frameworks and incorporated rigorous safety evaluations to reinforce these boundaries effectively. We’ve published an addendum to the o3 System Card to reflect these evaluations.


The Codex agent operates entirely within a secure, isolated container in the cloud. During task execution, internet access is disabled, limiting the agent’s interaction solely to the code explicitly provided via GitHub repositories and pre-installed dependencies configured by the user via a setup script. The agent cannot access external websites, APIs, or other services.

Early use cases

Technical teams at OpenAI have started using Codex as part of their daily toolkit. It is most often used by OpenAI engineers to offload repetitive, well-scoped tasks, like refactoring, renaming, and writing tests, that would otherwise break focus. It’s equally useful for scaffolding new features, wiring components, fixing bugs, and drafting documentation. Teams are building new habits around it: triaging on-call issues, planning tasks at the start of the day, and offloading background work to keep moving. By reducing context-switching and surfacing forgotten to-dos, Codex helps engineers ship faster and stay focused on what matters most.


[Video: “OpenAI Codex On Call Triage Demo Video”, OpenAI on Vimeo]

Leading up to release, we’ve also been working with a small group of external testers to better understand how Codex performs across diverse codebases, development processes, and teams.

- Cisco is exploring how Codex can help its engineering teams bring ambitious ideas to life faster. As an early design partner, Cisco is helping shape the future of Codex by evaluating it for real-world use cases across its product portfolio and providing feedback to the OpenAI team.

- Temporal uses Codex to accelerate feature development, debug issues, write and execute tests, and refactor large codebases. It also helps them stay focused by running complex tasks in the background, keeping engineers in flow while speeding up iteration.

- Superhuman uses Codex to speed up small but repetitive tasks like improving test coverage and fixing integration failures. It also helps them ship faster by enabling product managers to contribute lightweight code changes without pulling in an engineer, except for code review.

- Kodiak is using Codex to help write debugging tools, improve test coverage, and refactor code, accelerating development of the Kodiak Driver, their autonomous driving technology. Codex has also become a valuable reference tool, helping engineers understand unfamiliar parts of the stack by surfacing relevant context and past changes.

Based on learnings from early testers, we recommend assigning well-scoped tasks to multiple agents simultaneously, and experimenting with different types of tasks and prompts to explore the model’s capabilities effectively.

Updates to Codex CLI

Last month, we launched Codex CLI, a lightweight open-source coding agent that runs in your terminal. It brings the power of models like o3 and o4-mini into your local workflow, making it easy to pair with them to complete tasks faster.


Today, we’re also releasing a smaller version of codex-1, a version of o4-mini designed specifically for use in Codex CLI. This new model supports faster workflows in the CLI and is optimized for low-latency code Q&A and editing, while retaining the same strengths in instruction following and style. It’s available now as the default model in Codex CLI and in the API as codex-mini-latest. The underlying snapshot will be regularly updated as we continue to improve the Codex-mini model.
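
For developers who want to call the smaller model directly, a minimal sketch using the official `openai` Python package might look like the following. The model name `codex-mini-latest` and its availability on the Responses API come from this post. The helper names, the instructions text, and the sample prompt are illustrative assumptions, and the call needs an `OPENAI_API_KEY` in the environment.

```python
def build_request(prompt):
    """Build keyword arguments for a Responses API call (hypothetical helper)."""
    return {
        "model": "codex-mini-latest",  # model name as given in this post
        "instructions": "You are a concise coding assistant.",  # assumed wording
        "input": prompt,
    }


def ask_codex_mini(prompt):
    """Send the prompt to the model and return its text output."""
    # Imported here so build_request can be used without the package installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.responses.create(**build_request(prompt))
    return response.output_text


# Example call (requires network access and an API key):
# print(ask_codex_mini("Explain what `git status --porcelain` prints."))
```

Keeping the request construction separate from the network call makes it easy to inspect or log exactly what would be sent before spending any tokens.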

We’re also making it much easier to connect your developer account to Codex CLI. Instead of manually generating and configuring an API token, you can now sign in with your ChatGPT account and select the API organization you want to use. We’ll automatically generate and configure the API key for you. Plus and Pro users who sign in to Codex CLI with ChatGPT can also redeem $5 and $50 in free API credits, respectively, starting later today and for the next 30 days.

Codex availability, pricing, and limitations

Starting today, we’re rolling out Codex to ChatGPT Pro, Enterprise, and Team users globally, with support for Plus and Edu coming soon. For the coming weeks, you will have generous access at no additional cost so you can explore what Codex can do, after which we’ll roll out rate-limited access and flexible pricing options that let you purchase additional usage on demand.

For developers building with codex-mini-latest, the model is available on the Responses API and priced at $1.50 per 1M input tokens and $6 per 1M output tokens, with a 75% prompt caching discount.
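
As a worked example of the pricing above, the sketch below estimates the cost of a single request. It assumes the 75% prompt caching discount applies to the cached portion of the input tokens, so cached input is billed at 25% of the $1.50 rate. The function name and the token counts are hypothetical.

```python
INPUT_USD_PER_MILLION = 1.50
OUTPUT_USD_PER_MILLION = 6.00
CACHE_DISCOUNT = 0.75  # assumption: applies to cached input tokens only


def estimate_cost_usd(input_tokens, output_tokens, cached_input_tokens=0):
    """Estimate the cost of one request in USD under the stated assumptions."""
    if cached_input_tokens > input_tokens:
        raise ValueError("cached tokens cannot exceed total input tokens")
    uncached_tokens = input_tokens - cached_input_tokens
    cached_rate = INPUT_USD_PER_MILLION * (1 - CACHE_DISCOUNT)
    total = (
        uncached_tokens * INPUT_USD_PER_MILLION
        + cached_input_tokens * cached_rate
        + output_tokens * OUTPUT_USD_PER_MILLION
    )
    return total / 1_000_000


# 200k input tokens (120k of them cached) and 10k output tokens:
# 80k * $1.50/M + 120k * $0.375/M + 10k * $6/M = $0.12 + $0.045 + $0.06
print(round(estimate_cost_usd(200_000, 10_000, cached_input_tokens=120_000), 3))  # 0.225
```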


Codex is still early in its development. As a research preview, it currently lacks features like image inputs for frontend work, and the ability to course-correct the agent while it’s working. Additionally, delegating to a remote agent takes longer than interactive editing, which can take some getting used to. Over time, interacting with Codex agents will increasingly resemble asynchronous collaboration with colleagues. As model capabilities advance, we anticipate agents handling more complex tasks over extended periods.

What’s next

We imagine a future where developers drive the work they want to own and delegate the rest to agents—moving faster and being more productive with AI. To achieve that, we’re building a suite of Codex tools that support both real-time collaboration and asynchronous delegation.


Pairing with AI tools like Codex CLI and others has quickly become an industry norm, helping developers move faster as they code. But we believe the asynchronous, multi-agent workflow introduced by Codex in ChatGPT will become the de facto way engineers produce high-quality code.


Ultimately, we see these two modes of interaction—real-time pairing and task delegation—converging. Developers will collaborate with AI agents across their IDEs and everyday tools to ask questions, get suggestions, and offload longer tasks, all in a unified workflow.


Looking ahead, we plan to introduce more interactive and flexible agent workflows. Developers will soon be able to provide guidance mid-task, collaborate on implementation strategies, and receive proactive progress updates. We also envision deeper integrations across the tools you already use: today Codex connects with GitHub, and soon you’ll be able to assign tasks from Codex CLI, ChatGPT Desktop, or even tools such as your issue tracker or CI system.


Software engineering is one of the first industries to experience significant AI-driven productivity gains, opening new possibilities for individuals and small teams. While we’re optimistic about these gains, we’re also collaborating with partners to better understand the implications of widespread agent adoption for developer workflows and for skill development across people, skill levels, and geographies.

This is just the beginning—and we’re excited to see what you build with Codex.

Livestream replay

[Video: “A research preview of Codex in ChatGPT”, YouTube]

Appendix

System message

We are sharing the codex-1 system message to help developers understand the model’s default behavior and tailor Codex to work effectively in custom workflows. For example, the codex-1 system message encourages Codex to run all tests mentioned in the AGENTS.md file, but if you’re short on time, you can ask Codex to skip these tests.


# Instructions

- The user will provide a task.

- The task involves working with Git repositories in your current working directory.

- Wait for all terminal commands to be completed (or terminate them) before finishing.

# Git instructions

If completing the user's task requires writing or modifying files:

- Do not create new branches.

- Use git to commit your changes.

- If pre-commit fails, fix issues and retry.

- Check git status to confirm your commit. You must leave your worktree in a clean state.

- Only committed code will be evaluated.

- Do not modify or amend existing commits.

# AGENTS.md spec

- Containers often contain AGENTS.md files. These files can appear anywhere in the container's filesystem. Typical locations include `/`, `~`, and in various places inside of Git repos.

- These files are a way for humans to give you (the agent) instructions or tips for working within the container.

- Some examples might be: coding conventions, info about how code is organized, or instructions for how to run or test code.

- AGENTS.md files may provide instructions about PR messages (messages attached to a GitHub Pull Request produced by the agent, describing the PR). These instructions should be respected.

- Instructions in AGENTS.md files:

    - The scope of an AGENTS.md file is the entire directory tree rooted at the folder that contains it.

    - For every file you touch in the final patch, you must obey instructions in any AGENTS.md file whose scope includes that file.

    - Instructions about code style, structure, naming, etc. apply only to code within the AGENTS.md file's scope, unless the file states otherwise.

    - More-deeply-nested AGENTS.md files take precedence in the case of conflicting instructions.

    - Direct system/developer/user instructions (as part of a prompt) take precedence over AGENTS.md instructions.

- AGENTS.md files need not live only in Git repos. For example, you may find one in your home directory.

- If the AGENTS.md includes programmatic checks to verify your work, you MUST run all of them and make a best effort to validate that the checks pass AFTER all code changes have been made.

    - This applies even for changes that appear simple, i.e. documentation. You still must run all of the programmatic checks.

# Citations instructions

- If you browsed files or used terminal commands, you must add citations to the final response (not the body of the PR message) where relevant. Citations reference file paths and terminal outputs with the following formats:

1) `【F:<file_path>†L<line_start>(-L<line_end>)?】`

- File path citations must start with `F:`. `file_path` is the exact file path of the file relative to the root of the repository that contains the relevant text.

- `line_start` is the 1-indexed start line number of the relevant output within that file.

2) `【<chunk_id>†L<line_start>(-L<line_end>)?】`

- Where `chunk_id` is the chunk_id of the terminal output, `line_start` and `line_end` are the 1-indexed start and end line numbers of the relevant output within that chunk.

- Line ends are optional, and if not provided, line end is the same as line start, so only 1 line is cited.

- Ensure that the line numbers are correct, and that the cited file paths or terminal outputs are directly relevant to the word or clause before the citation.

- Do not cite completely empty lines inside the chunk, only cite lines that have content.

- Only cite from file paths and terminal outputs, DO NOT cite from previous pr diffs and comments, nor cite git hashes as chunk ids.

- Use file path citations that reference any code changes, documentation or files, and use terminal citations only for relevant terminal output.

- Prefer file citations over terminal citations unless the terminal output is directly relevant to the clauses before the citation, i.e. clauses on test results.

- For PR creation tasks, use file citations when referring to code changes in the summary section of your final response, and terminal citations in the testing section.

- For question-answering tasks, you should only use terminal citations if you need to programmatically verify an answer (i.e. counting lines of code). Otherwise, use file citations.
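
Outside the system message itself, the two citation formats it defines can be read back out of a response with a short parser. The sketch below is an illustration under assumptions: the function name `parse_citations`, the sample sentence, and the chunk id `a1b2c3` are hypothetical, and the only rules it encodes are the ones stated above (file citations start with `F:`, and a missing line end means a single line is cited).

```python
import re

# Matches both formats:
#   【F:<file_path>†L<line_start>(-L<line_end>)?】
#   【<chunk_id>†L<line_start>(-L<line_end>)?】
CITATION_RE = re.compile(
    r"【(?P<source>[^†【】]+)†L(?P<start>\d+)(?:-L(?P<end>\d+))?】"
)


def parse_citations(text):
    """Extract citations as dicts with kind, source, and 1-indexed line range."""
    citations = []
    for match in CITATION_RE.finditer(text):
        source = match.group("source")
        start = int(match.group("start"))
        # A missing line end means the citation covers exactly one line.
        end = int(match.group("end")) if match.group("end") else start
        if source.startswith("F:"):
            kind, source = "file", source[2:]
        else:
            kind = "terminal"
        citations.append(
            {"kind": kind, "source": source, "start": start, "end": end}
        )
    return citations


sample = "Added a retry loop【F:src/client.py†L10-L24】 and the tests pass【a1b2c3†L3】."
for citation in parse_citations(sample):
    print(citation)
# {'kind': 'file', 'source': 'src/client.py', 'start': 10, 'end': 24}
# {'kind': 'terminal', 'source': 'a1b2c3', 'start': 3, 'end': 3}
```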